You’ve learned the fundamentals of machine learning and built your first model. Now you’re facing a crucial question: which algorithm should you actually use for your problem? The number of options can feel overwhelming.

Choosing the right machine learning model type can make the difference between a project that works and one that wastes weeks of your time. Different algorithms excel at different tasks. Using regression when you need classification, or vice versa, undermines your project from the start.

The good news is that machine learning model types fall into clear categories based on what you’re trying to predict. Once you understand these categories and what problems they solve, choosing the right algorithm becomes straightforward. Let me break down the main types and show you exactly when to use each one.

Regression models for predicting numbers

Regression models predict continuous numerical values. Use regression when your target is a number that can take any value within a range. House prices, temperatures, sales figures, and distances all call for regression.

The key characteristic is that your output is a measurement on a continuous scale. Predicting that a house will cost 275,342 dollars or that tomorrow’s temperature will be 72.3 degrees requires regression.

Linear regression is the simplest and often the best starting point. It assumes a straight-line relationship between the features and the target. Despite its simplicity, linear regression works surprisingly well for many real problems.

```python
from sklearn.linear_model import LinearRegression
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split

# Generate sample data
X, y = make_regression(n_samples=100, n_features=3, noise=10, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Train linear regression
model = LinearRegression()
model.fit(X_train, y_train)

# Make predictions
predictions = model.predict(X_test)
print(f"Sample predictions: {predictions[:5]}")
```

Decision tree regression handles non-linear relationships better than linear models. Trees split your data based on feature values, creating a hierarchy of decisions that leads to predictions.

Random forest regression combines many decision trees for better accuracy and reduced overfitting. Each tree makes a prediction and the final output is the average of all trees.

Support vector regression works well for smaller datasets and can capture complex patterns. Neural networks handle very complex non-linear relationships but need lots of data and computing power.

For most regression problems, start with linear regression. If it doesn’t perform well, try random forests. This approach covers the majority of real-world use cases.
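
As a rough illustration of that advice, the sketch below fits both models to synthetic data where the target depends on the square of the feature. The data, the seed, and the variable names are assumptions made up for this example, not results from a real project.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic data (an assumption for illustration): y = x^2 plus noise
rng = np.random.default_rng(42)
X = rng.uniform(-3, 3, size=(300, 1))
y = X[:, 0] ** 2 + rng.normal(scale=0.5, size=300)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Fit the simple baseline first, then the more flexible model
linear = LinearRegression().fit(X_train, y_train)
forest = RandomForestRegressor(n_estimators=100, random_state=42).fit(X_train, y_train)

# .score() returns R-squared on the held-out data
linear_r2 = linear.score(X_test, y_test)
forest_r2 = forest.score(X_test, y_test)
print(f"Linear regression R^2: {linear_r2:.2f}")
print(f"Random forest R^2:     {forest_r2:.2f}")
```

A straight line cannot follow a U-shaped relationship, so the forest should score noticeably higher here. On data that really is linear, the gap would shrink or reverse.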

Classification models for predicting categories

Classification models predict which category or class something belongs to. Use classification when your target is a discrete label rather than a number. Spam or not spam, cat or dog, fraudulent or legitimate all need classification.

The output is a category from a predefined set. You’re not predicting how much or how many. You’re predicting which type or which group.

Binary classification handles two classes like yes/no or positive/negative. Multi-class classification handles three or more classes like predicting which species of flower or which type of customer.

Logistic regression is the go-to algorithm for binary classification, despite the word “regression” in its name. It predicts the probability that an instance belongs to each class and assigns it to the most likely one.

```python
from sklearn.linear_model import LogisticRegression
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Generate classification data
X, y = make_classification(n_samples=100, n_features=4, n_classes=2, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Train logistic regression
model = LogisticRegression()
model.fit(X_train, y_train)

# Predict and evaluate
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy:.2f}")
```

Decision trees work for classification just as they do for regression. They split data based on features to separate classes. Random forests improve on single trees by combining many of them.

Support vector machines find the best boundary separating your classes. They work particularly well when classes are clearly separable and you have moderate amounts of data.

Naive Bayes is fast and works well for text classification like spam detection. It assumes features are independent of one another given the class, and uses Bayes’ theorem to compute the most probable class for each new instance.
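
To make the spam example concrete, here is a minimal sketch using scikit-learn’s `CountVectorizer` and `MultinomialNB`. The six messages and their labels are invented for illustration, and a real filter would need far more data.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Tiny hand-written dataset (an assumption for illustration)
messages = [
    "win a free prize now",
    "free money claim your prize",
    "limited offer win cash now",
    "meeting moved to tuesday",
    "lunch with the team tomorrow",
    "please review the project report",
]
labels = [1, 1, 1, 0, 0, 0]  # 1 = spam, 0 = not spam

# Turn each message into word counts, then fit Naive Bayes on those counts
spam_filter = make_pipeline(CountVectorizer(), MultinomialNB())
spam_filter.fit(messages, labels)

new_messages = ["claim your free cash prize", "project meeting tomorrow"]
predicted = spam_filter.predict(new_messages)
for text, label in zip(new_messages, predicted):
    print(f"{text!r} -> {'spam' if label == 1 else 'not spam'}")
```

On this toy data the first new message is classed as spam and the second is not, because each shares its words with only one of the two classes.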

Neural networks can learn very complex decision boundaries but need substantial training data. For image classification or when you have hundreds of thousands of examples, neural networks often work best.

Start with logistic regression for binary classification or random forests for multi-class problems. These cover most scenarios and train quickly enough for experimentation.
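
For the multi-class case, a sketch along the following lines shows a random forest on the three-class iris dataset that ships with scikit-learn. The split size and the number of trees are arbitrary choices for this example.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Iris has three flower species, so this is a multi-class problem
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# The same estimator handles two classes or many without extra setup
forest = RandomForestClassifier(n_estimators=100, random_state=42)
forest.fit(X_train, y_train)

accuracy = accuracy_score(y_test, forest.predict(X_test))
print(f"Classes seen during training: {forest.classes_}")
print(f"Test accuracy: {accuracy:.2f}")
```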

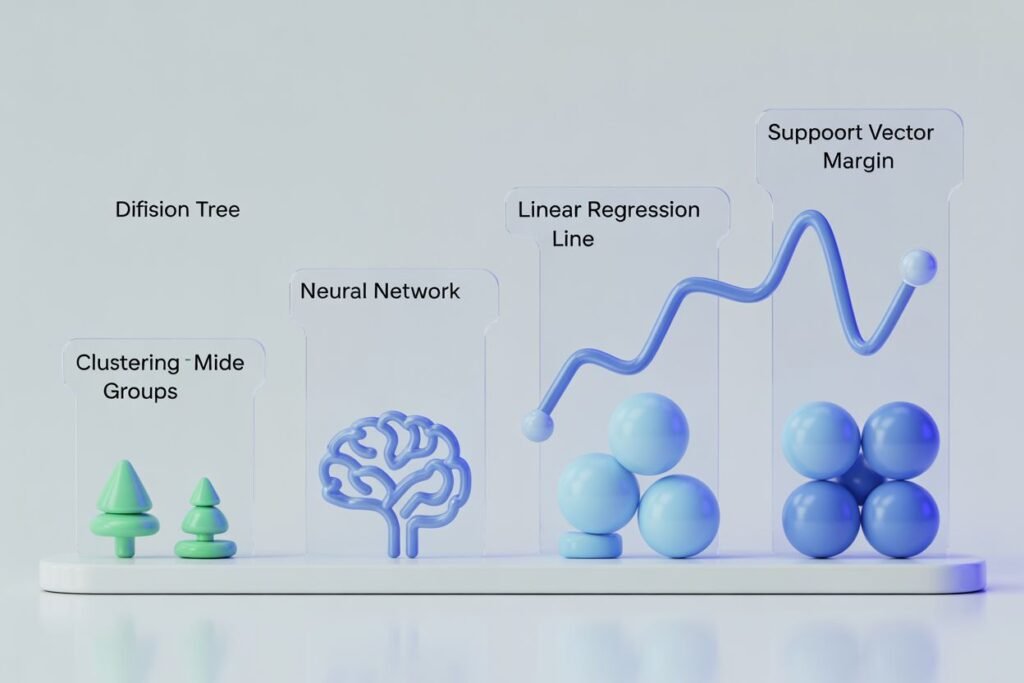

Clustering models for finding groups

Clustering finds natural groupings in your data without predefined labels. Use clustering when you want to discover patterns or segments but don’t have labels telling you what groups exist.

Unlike regression and classification where you have targets to predict, clustering is unsupervised. You give the algorithm unlabeled data and it discovers structure on its own.

Customer segmentation is a classic clustering use case. You have data about customer behavior but no predefined customer types. Clustering reveals groups of similar customers you can target differently.

K-means is the most popular clustering algorithm. You specify how many clusters you want, and the algorithm alternates between assigning each data point to its nearest cluster center and moving each center to the mean of its assigned points. It’s fast and works well when clusters are roughly spherical.

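```python
from sklearn.cluster import KMeans
import numpy as np

# Generate sample data (seeded so the output is reproducible)
rng = np.random.default_rng(42)
X = rng.standard_normal((100, 2))

# Perform k-means clustering; n_init is set explicitly because its
# default differs between scikit-learn versions
kmeans = KMeans(n_clusters=3, n_init=10, random_state=42)
labels = kmeans.fit_predict(X)

print(f"Cluster assignments: {labels[:10]}")
print(f"Cluster centers:\n{kmeans.cluster_centers_}")
```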

Hierarchical clustering builds a tree of clusters without needing to specify the number upfront. You can cut the tree at different levels to get different numbers of clusters.
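
One way to see the "cut the tree at different levels" idea is with SciPy’s hierarchy module, sketched below. Using SciPy rather than scikit-learn is a choice made for this example, and the three blobs of points are synthetic.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

# Three synthetic blobs (assumed data for illustration)
rng = np.random.default_rng(42)
X = np.vstack([
    rng.normal(loc=(0, 0), scale=0.5, size=(30, 2)),
    rng.normal(loc=(5, 5), scale=0.5, size=(30, 2)),
    rng.normal(loc=(0, 5), scale=0.5, size=(30, 2)),
])

# Build the full merge tree once, using Ward linkage
tree = linkage(X, method="ward")

# Cut the same tree at different levels to get different cluster counts
cluster_counts = {}
for k in (2, 3, 4):
    labels = fcluster(tree, t=k, criterion="maxclust")
    cluster_counts[k] = len(set(labels))
    print(f"Requested at most {k} clusters, got {cluster_counts[k]}")
```

The tree is built only once. Each call to `fcluster` just reads a different number of groups off it, which makes exploring several segmentations cheap.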

DBSCAN finds clusters of arbitrary shape and automatically identifies outliers. It works well when clusters have irregular boundaries or when you have noise in your data.
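
The sketch below tries DBSCAN on two interleaved half-moons, a shape k-means tends to struggle with, plus one far-away point added by hand. The `eps` and `min_samples` values are assumptions tuned to this toy data and would need adjusting for real data.

```python
import numpy as np
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_moons

# Two crescent-shaped clusters, plus one manually added outlier
X, _ = make_moons(n_samples=300, noise=0.05, random_state=42)
X = np.vstack([X, [[3.0, 3.0]]])

# eps is the neighborhood radius, min_samples the density threshold
dbscan = DBSCAN(eps=0.2, min_samples=5)
labels = dbscan.fit_predict(X)

# DBSCAN labels noise points as -1, so exclude that when counting clusters
n_clusters = len(set(labels)) - (1 if -1 in labels else 0)
n_noise = int(np.sum(labels == -1))
print(f"Clusters found: {n_clusters}")
print(f"Points flagged as noise: {n_noise}")
```

Note that the number of clusters is never passed in. It falls out of the density settings.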

Gaussian mixture models assume data comes from multiple Gaussian distributions. They provide soft clustering where points can partially belong to multiple clusters.
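
Soft clustering is easiest to see in the membership probabilities. In this sketch, the two Gaussian blobs are synthetic, and `predict_proba` returns one probability per cluster for each point.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

# Two overlapping synthetic blobs (assumed data for illustration)
rng = np.random.default_rng(42)
X = np.vstack([
    rng.normal(loc=0.0, scale=1.0, size=(100, 2)),
    rng.normal(loc=3.0, scale=1.0, size=(100, 2)),
])

gmm = GaussianMixture(n_components=2, random_state=42).fit(X)

# Each row holds the probability of belonging to each component
probs = gmm.predict_proba(X[:5])
print(np.round(probs, 3))
```

Points sitting between the two blobs get split probabilities, whereas k-means would force each of them into exactly one cluster.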

The challenge with clustering is evaluating results since you don’t have ground truth labels. Silhouette scores and visual inspection help determine if your clusters make sense.
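
A common way to use silhouette scores is to try several cluster counts and compare. The sketch below does this on synthetic blobs with four known centers. The centers and spread are assumptions chosen so the answer is easy to check.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Four well-separated synthetic groups (assumed data for illustration)
centers = [[-5, -5], [-5, 5], [5, -5], [5, 5]]
X, _ = make_blobs(n_samples=300, centers=centers, cluster_std=0.8, random_state=42)

# Score each candidate k; values closer to 1 mean tighter, better-separated clusters
scores = {}
for k in range(2, 7):
    labels = KMeans(n_clusters=k, n_init=10, random_state=42).fit_predict(X)
    scores[k] = silhouette_score(X, labels)
    print(f"k={k}: silhouette={scores[k]:.3f}")

best_k = max(scores, key=scores.get)
print(f"Best k by silhouette score: {best_k}")
```

Real data rarely gives such a clean peak, so treat the score as one input alongside visual inspection rather than a final verdict.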

How to choose between model types

The decision process for choosing machine learning model types is simpler than you might think. Start by asking what you’re trying to predict.

Predicting a number? Use regression. Linear regression as a starting point, random forests if you need better performance.

Predicting a category? Use classification. Logistic regression for two classes, random forests for multiple classes.

Discovering patterns without labels? Use clustering. K-means is usually your best bet unless you have irregular cluster shapes.
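
The three questions above can be sketched as a small helper. `suggest_model_family` is a hypothetical function written for this article, built on scikit-learn’s `type_of_target` utility, and it is only a rough first guess. Integer-valued numbers such as counts look like class labels to it.

```python
from sklearn.utils.multiclass import type_of_target


def suggest_model_family(target=None):
    """Hypothetical helper: map a target column to a model family."""
    if target is None:
        return "clustering"  # no labels at all, so look for groups
    kind = type_of_target(target)
    if kind == "continuous":
        return "regression"
    if kind in ("binary", "multiclass"):
        return "classification"
    return f"unclear ({kind})"


print(suggest_model_family([275342.5, 310000.0, 189999.9]))  # house prices
print(suggest_model_family(["spam", "not spam", "spam"]))    # two labels
print(suggest_model_family([0, 1, 2, 1]))                    # three labels
print(suggest_model_family())                                # no target
```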

Beyond the basic type, consider your data characteristics. How many examples do you have? A few hundred are often enough for simpler models. Neural networks typically need thousands to millions.

How many features? Dozens work for most algorithms. Hundreds or thousands might need dimensionality reduction or algorithms that handle high dimensions well.
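
As one example of dimensionality reduction, the sketch below applies PCA to the 64-pixel digits dataset bundled with scikit-learn. Keeping 95 percent of the variance is an arbitrary threshold picked for illustration.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA

# Each digit image is flattened into 64 pixel features
X, _ = load_digits(return_X_y=True)

# A float n_components asks PCA to keep enough components
# to explain that fraction of the variance
pca = PCA(n_components=0.95)
X_reduced = pca.fit_transform(X)

print(f"Original features: {X.shape[1]}")
print(f"Features after PCA: {X_reduced.shape[1]}")
print(f"Variance retained: {pca.explained_variance_ratio_.sum():.3f}")
```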

Is your relationship linear or complex? Linear models work great when relationships are straightforward. Trees and neural networks handle complex non-linear patterns.

Do you need to explain predictions? Linear models and decision trees are interpretable. Neural networks are black boxes that are hard to explain.
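
Here is a short sketch of what "interpretable" can look like in practice: the coefficients of a linear model and the printed rules of a shallow decision tree. The datasets are scikit-learn’s bundled diabetes and iris sets, chosen only for convenience.

```python
from sklearn.datasets import load_diabetes, load_iris
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeClassifier, export_text

# Linear model: one coefficient per feature shows direction and size of effect
diabetes = load_diabetes()
linear = LinearRegression().fit(diabetes.data, diabetes.target)
for name, coef in zip(diabetes.feature_names, linear.coef_):
    print(f"{name:>4}: {coef:8.1f}")

# Shallow decision tree: the learned rules print as plain if/else text
iris = load_iris()
tree = DecisionTreeClassifier(max_depth=2, random_state=42)
tree.fit(iris.data, iris.target)
rules = export_text(tree, feature_names=iris.feature_names)
print(rules)
```

Both outputs can be read and questioned by someone who has never trained a model, which is much harder to achieve with a neural network’s weights.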

How much computing power do you have? Simple models train in seconds on a laptop. Deep neural networks might need GPUs and hours or days of training time.

Practical considerations for real projects

Theory is useful but real projects involve additional factors. Data quality matters more than algorithm choice. A simple model on clean, relevant data beats a sophisticated model on messy, poorly chosen features.

Start simple and add complexity only when needed. Linear regression or logistic regression should be your first try for most problems. These establish a baseline to beat with fancier algorithms.

Try multiple algorithms and compare their performance. Train a linear model, a tree-based model, and maybe a neural network if you have enough data. Use cross-validation to get reliable performance estimates.
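
A comparison like that might look as follows. The candidate list, the dataset (scikit-learn’s bundled breast cancer data), and the five folds are all assumptions made for this sketch.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# A linear model (with scaling), a single tree, and an ensemble of trees
candidates = {
    "logistic regression": make_pipeline(
        StandardScaler(), LogisticRegression(max_iter=1000)
    ),
    "decision tree": DecisionTreeClassifier(random_state=42),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=42),
}

# 5-fold cross-validation: mean accuracy across the folds for each model
results = {}
for name, model in candidates.items():
    results[name] = cross_val_score(model, X, y, cv=5).mean()
    print(f"{name:>20}: {results[name]:.3f}")
```

Putting the scaler inside the pipeline means it is refit on each training fold, so no information leaks from the held-out fold.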

Feature engineering often improves results more than switching algorithms. Creating new features from existing ones, combining variables, or transforming them can dramatically boost performance with the same model.
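
To illustrate, the sketch below keeps the same linear model and only adds one combined feature. The scenario (land prices driven by area) and all the numbers are invented for this example.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic plots of land (assumed data): price depends on width * length
rng = np.random.default_rng(42)
width = rng.uniform(5, 20, size=500)
length = rng.uniform(5, 20, size=500)
price = 100 * width * length + rng.normal(scale=500, size=500)

X_raw = np.column_stack([width, length])
X_engineered = np.column_stack([width, length, width * length])  # add area


def holdout_r2(X, y):
    """Fit linear regression on a train split, return R-squared on the test split."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42
    )
    return LinearRegression().fit(X_train, y_train).score(X_test, y_test)


raw_r2 = holdout_r2(X_raw, price)
engineered_r2 = holdout_r2(X_engineered, price)
print(f"Raw features R^2:        {raw_r2:.3f}")
print(f"With area feature R^2:   {engineered_r2:.3f}")
```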

Don’t obsess over squeezing out every last bit of accuracy. A model that’s 2 percent less accurate but trains in one-tenth of the time might be the better choice for production.

Consider maintenance and deployment. Complex models are harder to update and monitor. Simpler models have fewer things that can break and are easier to debug when something goes wrong.

Common algorithm choices by problem type

Let me give you specific recommendations for common machine learning problems you’ll encounter.

Predicting prices or sales: Start with linear regression. Try random forest if you need better accuracy. Gradient boosting if you need the absolute best performance and have time to tune it.

Spam detection or sentiment analysis: Logistic regression with text features works well. Naive Bayes is fast for text. Neural networks if you have massive datasets.

Image classification: Convolutional neural networks are the standard. Transfer learning with pre-trained models saves training time.

Customer segmentation: K-means for simple, fast segmentation. Hierarchical clustering if you want to explore different numbers of segments.

Fraud detection: Random forests are a solid choice, though fraud data is usually heavily imbalanced, so pair them with class weighting or resampling. Anomaly detection algorithms like isolation forests for finding unusual patterns.
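
A minimal isolation forest sketch is shown below. The "transactions" are just synthetic 2D points with two extreme values added by hand, and the `contamination` rate is an assumed guess at how many anomalies to expect.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

# Mostly ordinary points, plus two hand-placed extreme ones (assumed data)
rng = np.random.default_rng(42)
normal = rng.normal(loc=0.0, scale=1.0, size=(200, 2))
unusual = np.array([[8.0, 8.0], [-9.0, 7.5]])
X = np.vstack([normal, unusual])

# contamination is the expected share of anomalies in the data
detector = IsolationForest(contamination=0.02, random_state=42).fit(X)

# predict() returns -1 for anomalies and 1 for normal points
flags = detector.predict(X)
print(f"Flagged as anomalies: {int((flags == -1).sum())} of {len(X)}")
print(f"Flags for the two hand-placed points: {flags[-2:]}")
```

No labels are needed, which is useful when confirmed fraud cases are rare or missing entirely.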

Recommendation systems: Collaborative filtering for user-item recommendations. Neural networks for complex recommendation scenarios.

Time series forecasting: ARIMA for a traditional statistical approach. LSTM neural networks for complex temporal patterns.

Testing and validating your choice

Once you pick a model type, validate that it actually works for your problem. Split your data into training, validation, and test sets. Never evaluate on data the model saw during training.
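
scikit-learn has no single three-way split function, so one common approach is to call `train_test_split` twice, as sketched here. The 60/20/20 proportions are an assumption, not a rule.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, n_features=10, random_state=42)

# First hold out 20% as the final test set
X_temp, X_test, y_temp, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Then take 25% of the remaining 80% as validation (20% of the total)
X_train, X_val, y_train, y_val = train_test_split(
    X_temp, y_temp, test_size=0.25, random_state=42, stratify=y_temp
)

print(f"Train: {len(X_train)}, validation: {len(X_val)}, test: {len(X_test)}")
```

Tune and compare models on the validation set, and touch the test set only once at the very end.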

Use cross-validation to get robust performance estimates. Train on multiple different splits of your data and average the results. This keeps a single lucky or unlucky split from distorting your estimate.

Compare your model’s performance to simple baselines. Does your sophisticated algorithm actually beat just predicting the average for regression, or the most common class for classification? If not, something is wrong.
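
A baseline check can be as short as the sketch below, which uses scikit-learn’s `DummyRegressor` to predict the training mean every time. The diabetes dataset and the five folds are choices made for this example.

```python
from sklearn.datasets import load_diabetes
from sklearn.dummy import DummyRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score

X, y = load_diabetes(return_X_y=True)

# Baseline: ignore the features and always predict the mean target
baseline_r2 = cross_val_score(DummyRegressor(strategy="mean"), X, y, cv=5).mean()

# Candidate model, scored the same way (default scoring is R-squared)
model_r2 = cross_val_score(LinearRegression(), X, y, cv=5).mean()

print(f"Baseline R^2: {baseline_r2:.3f}")
print(f"Model R^2:    {model_r2:.3f}")
```

For classification, `DummyClassifier(strategy="most_frequent")` plays the same role.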

Look beyond accuracy. Precision, recall, F1 score, and confusion matrices give you a complete picture for classification. R-squared and residual plots help diagnose regression models.
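
The sketch below shows why accuracy alone can mislead when classes are imbalanced. The synthetic dataset is roughly 90 percent one class by construction, and the model choice is arbitrary.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import train_test_split

# Imbalanced synthetic data: about 90% class 0, 10% class 1 (assumed)
X, y = make_classification(
    n_samples=1000, n_features=10, weights=[0.9, 0.1], random_state=42
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
predictions = model.predict(X_test)

# Rows are true classes, columns are predicted classes
matrix = confusion_matrix(y_test, predictions)
print(matrix)

# Per-class precision, recall, and F1 score
report = classification_report(y_test, predictions)
print(report)
```

Look at the recall for the rare class in particular. A model can post high overall accuracy while missing many of the cases you care about most.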

Check for overfitting by comparing training and validation performance. If training accuracy is much higher than validation accuracy, your model has likely memorized the training data rather than learned patterns that generalize.
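
One way to see that gap is to compare an unconstrained decision tree with a shallow one on noisy data, as in this sketch. The dataset is synthetic and the depth limit of 3 is an arbitrary choice.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Noisy synthetic data (assumed): flip_y assigns random labels to ~10% of samples
X, y = make_classification(
    n_samples=500, n_features=20, n_informative=5, flip_y=0.1, random_state=42
)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, random_state=42
)

# Compare training and validation accuracy for a deep and a shallow tree
results = {}
for name, depth in (("unlimited depth", None), ("max_depth=3", 3)):
    tree = DecisionTreeClassifier(max_depth=depth, random_state=42)
    tree.fit(X_train, y_train)
    results[name] = (tree.score(X_train, y_train), tree.score(X_val, y_val))
    train_acc, val_acc = results[name]
    print(f"{name:>16}: train={train_acc:.2f}, validation={val_acc:.2f}")
```

The unconstrained tree fits every training point, noise included, so its training accuracy says almost nothing about how it will do on new data.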

Understanding machine learning model types and when to use each one removes much of the mystery from algorithm selection. You now have a framework for choosing the right tool for your specific problem. Ready to see these different model types in action? Check out our guide on understanding data in machine learning to learn how to prepare your data properly for whatever algorithm you choose.