You’ve built neural networks and trained them successfully. But you’re making critical decisions almost by default: using the Adam optimizer because it’s popular, categorical crossentropy because that’s what the tutorial used, and ReLU activations because everyone recommends them. Do you actually know why these choices make sense?

Understanding how to choose the right optimizer, loss function, and activation function for your neural network separates random experimentation from informed design. These core decisions profoundly impact training speed, final performance, and whether your model even converges. Making the wrong choices can waste hours or days on training that goes nowhere.

Neural network optimization requires matching your configuration to your specific problem. Binary classification needs different settings than regression. Small datasets require different approaches than massive ones. Let me show you how to make these choices intelligently rather than copying what worked for someone else’s different problem.

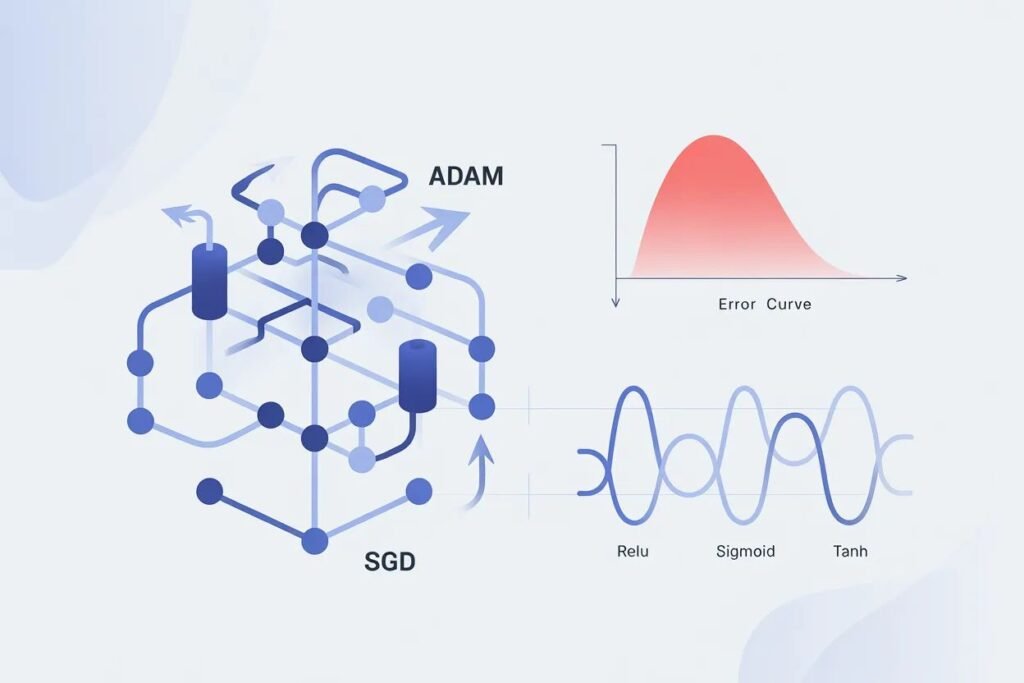

Understanding optimizer differences

Optimizers control how weights update during training using gradients from backpropagation. Different optimizers handle this process differently, affecting training speed and final model quality.

Stochastic Gradient Descent (SGD) is the simplest optimizer. It updates weights in the direction opposite to the gradient, scaled by the learning rate. That’s it: the new weight equals the old weight minus the learning rate times the gradient.
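To make the update rule concrete, here is a minimal sketch of plain gradient descent on a made-up one-parameter loss, f(w) = (w - 3)², whose minimum is at w = 3. The loss, starting point, and learning rate are illustrative assumptions. In real training the gradient would come from backpropagation on a mini-batch, which is what makes the method “stochastic”; the update itself is the same.

```python
# Plain gradient descent on a toy 1-D loss: f(w) = (w - 3)^2.
# The loss and starting point are illustrative assumptions, not from any library.

def grad(w):
    """Gradient of f(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)

def sgd_step(w, learning_rate):
    """One update: new weight = old weight - learning_rate * gradient."""
    return w - learning_rate * grad(w)

w = 0.0
for step in range(100):
    w = sgd_step(w, learning_rate=0.1)

print(round(w, 4))  # prints 3.0
```

With a learning rate of 0.1, each step shrinks the distance to the minimum by a factor of 0.8, so after 100 steps the weight is essentially 3.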

SGD is simple but has limitations. It uses the same learning rate for every parameter. It can slow to a crawl near saddle points and plateaus, where gradients are small. And it requires careful learning rate tuning.

Despite these issues, well-tuned SGD with momentum can match or even beat adaptive optimizers on final performance for some tasks. Momentum accumulates a running velocity from past gradients, which helps SGD accelerate along consistent gradient directions and dampens oscillations.

```python
from tensorflow import keras
from tensorflow.keras import optimizers

# SGD optimizer with momentum
sgd = optimizers.SGD(learning_rate=0.01, momentum=0.9)

# A smaller learning rate takes more cautious steps; the right value is problem-specific
sgd_slow = optimizers.SGD(learning_rate=0.001, momentum=0.9)

print("SGD with momentum configuration")
```
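Momentum can be sketched the same way. The loop below follows the velocity form of the update given in the Keras SGD documentation (velocity = momentum * velocity - learning_rate * g, then w = w + velocity), applied to a toy quadratic loss that is an assumption for illustration. Setting momentum to 0 recovers plain SGD, so the two runs differ in only that one setting.

```python
# SGD with momentum, using the velocity form of the update:
#   velocity = momentum * velocity - learning_rate * g
#   w = w + velocity
# The toy loss f(w) = (w - 3)^2 is an illustrative assumption.

def grad(w):
    return 2.0 * (w - 3.0)

def run_sgd(learning_rate, momentum, steps, w=0.0):
    velocity = 0.0
    for _ in range(steps):
        g = grad(w)
        velocity = momentum * velocity - learning_rate * g  # carry past steps forward
        w = w + velocity
    return w

w_plain = run_sgd(learning_rate=0.01, momentum=0.0, steps=100)     # plain SGD
w_momentum = run_sgd(learning_rate=0.01, momentum=0.9, steps=100)  # with momentum

print(f"plain SGD distance to minimum:     {abs(w_plain - 3.0):.4f}")
print(f"with momentum distance to minimum: {abs(w_momentum - 3.0):.4f}")
```

With the small learning rate of 0.01, plain SGD is still noticeably far from the minimum after 100 steps, while the momentum run, which builds up velocity along the consistent downhill direction, ends much closer.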

Adam is the most popular optimizer for good reason. It adapts learning rates for each parameter individually based on estimates of first and second moments of gradients. This makes it work well across many problems with minimal tuning.

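Adam combines ideas from momentum and RMSprop. It maintains running averages of the gradients and of the squared gradients. Dividing the first by the square root of the second gives each parameter its own effective step size: parameters with consistently large gradients take smaller steps relative to their gradient, and parameters with small gradients take relatively larger ones.

The main advantage is that Adam often works well with default settings. A learning rate of 0.001, which is also the Keras default, is a good starting point for most problems. This makes Adam ideal when you’re experimenting or don’t have time for extensive tuning.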

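```python
from tensorflow.keras import optimizers

# Adam optimizer (most common choice)
adam = optimizers.Adam(learning_rate=0.001)

# Adam with every hyperparameter spelled out (these values match the Keras defaults)
adam_custom = optimizers.Adam(
    learning_rate=0.001,
    beta_1=0.9,    # decay rate for the running average of gradients
    beta_2=0.999,  # decay rate for the running average of squared gradients
    epsilon=1e-07  # small constant for numerical stability
)

print("Adam optimizer ready")
```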
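Here is a minimal sketch of a single Adam step for a few scalar parameters, written in the textbook form: update the running averages, correct their startup bias, then take a per-parameter scaled step. It is not Keras’s internal code, which differs in small numerical details such as exactly where epsilon is added. The two parameters are given gradients four orders of magnitude apart to show the per-parameter adaptation.

```python
import math

def adam_step(params, grads, m, v, t, lr=0.001, beta_1=0.9, beta_2=0.999, eps=1e-7):
    """One textbook Adam update over scalar parameters.
    m and v are updated in place; the new parameter values are returned."""
    new_params = []
    for i, (p, g) in enumerate(zip(params, grads)):
        m[i] = beta_1 * m[i] + (1 - beta_1) * g        # running average of gradients
        v[i] = beta_2 * v[i] + (1 - beta_2) * g * g    # running average of squared gradients
        m_hat = m[i] / (1 - beta_1 ** t)               # bias correction for early steps
        v_hat = v[i] / (1 - beta_2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
    return new_params

params = [1.0, 1.0]
grads = [100.0, 0.01]          # gradients differing by four orders of magnitude
m, v = [0.0, 0.0], [0.0, 0.0]
updated = adam_step(params, grads, m, v, t=1)

steps = [p - u for p, u in zip(params, updated)]
print(steps)  # both step sizes are close to lr = 0.001
```

On the first step the bias-corrected averages reduce to g and g², so each parameter moves by roughly the learning rate regardless of its gradient’s scale. That scale invariance is a large part of why Adam needs less learning rate tuning than SGD.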

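RMSprop is similar to Adam but simpler. It scales each parameter’s learning rate by a moving average of its squared gradients. Because that average gradually forgets old gradients, RMSprop copes well with non-stationary objectives, and it has long been a common choice for recurrent neural networks.

AdaGrad adapts learning rates based on the full history of gradients for each parameter: parameters whose gradients have been large accumulate a large sum and get smaller learning rates. This works well for sparse data, but because the accumulated sum only grows, the effective learning rate keeps shrinking and can become too small for training to make progress.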
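The difference between AdaGrad’s growing sum and RMSprop’s moving average is easiest to see with a constant gradient. This sketch uses textbook versions of both rules with accumulators starting at zero (library implementations may initialize them differently) and records the step size each would take.

```python
import math

def effective_step_sizes(steps, lr=0.01, rho=0.9, eps=1e-7, g=1.0):
    """Track AdaGrad and RMSprop step sizes under a constant gradient g."""
    adagrad_accum = 0.0  # AdaGrad: running SUM of squared gradients (only grows)
    rms_avg = 0.0        # RMSprop: exponential moving AVERAGE of squared gradients
    adagrad_steps, rmsprop_steps = [], []
    for _ in range(steps):
        adagrad_accum += g * g
        rms_avg = rho * rms_avg + (1 - rho) * g * g
        adagrad_steps.append(lr * g / (math.sqrt(adagrad_accum) + eps))
        rmsprop_steps.append(lr * g / (math.sqrt(rms_avg) + eps))
    return adagrad_steps, rmsprop_steps

ada, rms = effective_step_sizes(steps=1000)
print(f"AdaGrad step after 1000 updates: {ada[-1]:.6f}")  # about lr / sqrt(1000)
print(f"RMSprop step after 1000 updates: {rms[-1]:.6f}")  # about lr
```

AdaGrad’s step falls roughly as the learning rate divided by the square root of the number of updates, while RMSprop’s settles near the learning rate because old squared gradients decay away.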

When to use which optimizer? Start with Adam for most problems. It’s robust and requires minimal tuning. Use SGD with momentum when you have time to tune learning rates carefully and want potentially better final performance. Use RMSprop for recurrent networks if Adam doesn’t work well.

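```python
from tensorflow import keras
from tensorflow.keras import optimizers

# Comparing optimizers on the same architecture
model_adam = keras.Sequential([
    keras.layers.Dense(128, activation='relu', input_shape=(784,)),
    keras.layers.Dense(10, activation='softmax')
])

# clone_model copies the architecture but creates freshly initialized weights;
# call model_sgd.set_weights(model_adam.get_weights()) for identical starting points
model_sgd = keras.models.clone_model(model_adam)

model_adam.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
model_sgd.compile(optimizer=optimizers.SGD(learning_rate=0.01, momentum=0.9),
                  loss='categorical_crossentropy', metrics=['accuracy'])

print("Models ready for optimizer comparison")
```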

Choosing the right loss function

Loss functions measure how wrong your predictions are. Different problems require different loss functions that match the nature of the task.

Mean Squared Error (MSE) is the standard loss for regression. It calculates the average of the squared differences between predictions and true values. Squaring penalizes large errors more heavily than small ones.

Use MSE when predicting continuous values like prices, temperatures, or distances. It works well when you care about getting close to the exact value and want to penalize large errors heavily.

```python
from tensorflow import keras

# MSE for regression
model_regression = keras.Sequential([
    keras.layers.Dense(64, activation='relu', input_shape=(10,)),
    keras.layers.Dense(1)  # Single output, linear (no activation)
])

model_regression.compile(
    optimizer='adam',
    loss='mse',
    metrics=['mae']  # track mean absolute error alongside the MSE loss
)

print("Regression model with MSE loss")
```

Mean Absolute Error (MAE) is another regression loss. It calculates the average of the absolute differences. MAE weights errors linearly: an error twice as large adds twice as much loss, whereas under MSE it adds four times as much.

Use MAE when you want errors weighted in proportion to their size or when your data has outliers that you don’t want to dominate the loss. MAE is less sensitive to extreme values than MSE.
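A quick numeric comparison shows the difference in outlier sensitivity. The sketch below computes both losses by hand in plain Python on made-up values: once with every prediction off by 1, and once with a single prediction off by 10.

```python
def mse(y_true, y_pred):
    """Mean of squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def mae(y_true, y_pred):
    """Mean of absolute differences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [10.0, 12.0, 11.0, 13.0]
small_errors = [11.0, 11.0, 12.0, 12.0]   # every prediction off by 1
one_outlier = [11.0, 11.0, 12.0, 23.0]    # last prediction off by 10

print(mse(y_true, small_errors), mae(y_true, small_errors))  # 1.0 1.0
print(mse(y_true, one_outlier), mae(y_true, one_outlier))    # 25.75 3.25
```

The single large error raises MSE from 1.0 to 25.75 but MAE only from 1.0 to 3.25, because squaring turns an error of 10 into a penalty of 100.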

Binary Crossentropy is the standard loss for binary (two-class) classification. It measures the difference between predicted probabilities and the true binary labels.

Use binary crossentropy when you have exactly two classes and your output layer uses a sigmoid activation, producing values between 0 and 1. By default, Keras’s binary crossentropy expects probabilities in that range, which is why the two are paired.

```python
from tensorflow import keras

# Binary classification
model_binary = keras.Sequential([
    keras.layers.Dense(64, activation='relu', input_shape=(20,)),
    keras.layers.Dense(1, activation='sigmoid')  # Single output with sigmoid
])

model_binary.compile(
    optimizer='adam',
    loss='binary_crossentropy',  # expects 0/1 labels and probabilities from sigmoid
    metrics=['accuracy']
)

print("Binary classification with binary crossentropy")
```
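For intuition about what binary crossentropy measures, here is a plain-Python sketch of the standard formula, -[y log p + (1 - y) log(1 - p)], averaged over samples. The clipping constant is an assumption added to avoid log(0), and the labels and probabilities are made up.

```python
import math

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean binary crossentropy; y_prob are sigmoid outputs in (0, 1)."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

labels = [1, 0, 1]
confident_right = [0.9, 0.1, 0.8]
confident_wrong = [0.1, 0.9, 0.2]

print(binary_crossentropy(labels, confident_right))  # small loss
print(binary_crossentropy(labels, confident_wrong))  # much larger loss
```

Confident correct predictions give a loss of about 0.14, while equally confident wrong ones push it above 2, which is how the loss steers sigmoid outputs toward the true labels.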

Categorical Crossentropy handles classification with more than two classes. It works with one-hot encoded labels, where each sample’s label is a vector of zeros with a single one marking the true class.

Use categorical crossentropy with softmax activation in the output layer. The output should have one neuron per class, and softmax converts outputs to probabilities summing to 1.

```python
from tensorflow import keras

# Multi-class classification
model_multiclass = keras.Sequential([
    keras.layers.Dense(128, activation='relu', input_shape=(784,)),
    keras.layers.Dense(10, activation='softmax')  # 10 classes
])

model_multiclass.compile(
    optimizer='adam',
    loss='categorical_crossentropy',  # expects one-hot encoded labels
    metrics=['accuracy']
)

print("Multi-class classification with categorical crossentropy")
```

Sparse Categorical Crossentropy is similar but works with integer labels instead of one-hot encoded vectors. If your labels are integers from 0 to num_classes minus 1, use sparse categorical crossentropy.

This saves memory when you have many classes because you don’t need to create large one-hot vectors. The loss value is identical to regular categorical crossentropy; only the label format differs.
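The sketch below computes categorical crossentropy for a single made-up sample both ways: from a one-hot label and from an integer class index. It is plain Python for illustration, not the Keras implementation, and assumes the probabilities came from a softmax output.

```python
import math

def categorical_crossentropy(one_hot, probs):
    """Crossentropy for one sample with a one-hot label."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs))

def sparse_categorical_crossentropy(class_index, probs):
    """Same loss, but the label is an integer class index."""
    return -math.log(probs[class_index])

probs = [0.7, 0.2, 0.1]     # softmax output for 3 classes
one_hot_label = [0, 1, 0]   # true class is 1
integer_label = 1

print(categorical_crossentropy(one_hot_label, probs))         # -log(0.2)
print(sparse_categorical_crossentropy(integer_label, probs))  # same value
```

Both calls return -log(0.2), about 1.609. With a one-hot label, every term except the true class is multiplied by zero, so the sparse version simply looks up the one probability that matters.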

Custom loss functions handle specialized cases. You can define any differentiable function that takes true and predicted values and returns a scalar loss. This lets you incorporate domain knowledge into training.
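As a sketch of the idea, here is a hypothetical asymmetric loss (not from the original text) that penalizes under-predictions three times as heavily as over-predictions, as you might want when predicting too low is costlier than predicting too high. It is plain Python to show the contract: true and predicted values in, one scalar out. In Keras you would express the same logic with tensor operations over a batch and pass the function itself as the loss argument to compile().

```python
def asymmetric_mse(y_true, y_pred, under_weight=3.0):
    """Hypothetical custom loss: squared error, with under-predictions
    weighted `under_weight` times more. Returns one scalar."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        err = t - p
        weight = under_weight if err > 0 else 1.0  # err > 0 means we predicted too low
        total += weight * err ** 2
    return total / len(y_true)

print(asymmetric_mse([10.0], [8.0]))   # under-prediction by 2 -> 12.0
print(asymmetric_mse([10.0], [12.0]))  # over-prediction by 2 -> 4.0
```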

Selecting activation functions

Activation functions introduce non-linearity that lets networks learn complex patterns. Different activations have different properties affecting training and performance.

ReLU (Rectified Linear Unit) is the default choice for hidden layers. It outputs the input if positive and zero otherwise. Mathematically, it’s max(0, x).

ReLU is simple, fast to compute, and mitigates the vanishing gradients that plagued earlier activations like sigmoid, because its gradient is exactly 1 for every positive input. It works well in most cases and should be your default for hidden layers.

```python
from tensorflow import keras

# ReLU in hidden layers (standard approach)
model_relu = keras.Sequential([
    keras.layers.Dense(128, activation='relu', input_shape=(100,)),
    keras.layers.Dense(64, activation='relu'),
    keras.layers.Dense(10, activation='softmax')
])

print("Model using ReLU activations")
```
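The rule max(0, x) and its gradient are short enough to write out directly. This plain-Python sketch treats the gradient at exactly zero as 0, a common convention, and shows why ReLU helps with vanishing gradients: for any positive input the gradient is exactly 1, so backpropagation through an active unit does not shrink it.

```python
def relu(x):
    return max(0.0, x)

def relu_grad(x):
    # 1 for positive inputs, 0 otherwise (the value at exactly 0 is a convention)
    return 1.0 if x > 0 else 0.0

inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
print([relu(x) for x in inputs])       # [0.0, 0.0, 0.0, 0.5, 2.0]
print([relu_grad(x) for x in inputs])  # [0.0, 0.0, 0.0, 1.0, 1.0]
```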

Leaky ReLU addresses one ReLU limitation. Regular ReLU outputs zero, with a zero gradient, for negative inputs, which can leave “dead” neurons that output zero for every input and stop updating. Leaky ReLU instead outputs a small negative value proportional to the input, so the gradient stays small but nonzero.

Use Leaky ReLU if you see many dead neurons with regular ReLU. A common leak coefficient is 0.01, meaning negative inputs are multiplied by 0.01 rather than set to zero. Keras’s LeakyReLU layer uses a different default slope (0.3), so set it explicitly if you want 0.01.
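A minimal plain-Python sketch of Leaky ReLU and its gradient, using the 0.01 slope from the text as the default value of a `slope` parameter:

```python
def leaky_relu(x, slope=0.01):
    """x for positive inputs, slope * x otherwise."""
    return x if x > 0 else slope * x

def leaky_relu_grad(x, slope=0.01):
    # Never exactly zero, so the unit can still receive updates
    return 1.0 if x > 0 else slope

print(leaky_relu(5.0), leaky_relu(-5.0))            # 5.0 -0.05
print(leaky_relu_grad(5.0), leaky_relu_grad(-5.0))  # 1.0 0.01
```

Unlike plain ReLU, the gradient for negative inputs is 0.01 rather than 0, so a unit pushed into the negative region can still recover.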

Sigmoid squashes inputs to a range between 0 and 1. It’s useful for output layers in binary classification where you want to interpret outputs as probabilities.

Don’t use sigmoid in hidden layers of deep networks. It suffers from vanishing gradients because the derivative is very small for large positive or negative inputs. ReLU works much better for hidden layers.

```python
from tensorflow import keras

# Sigmoid for binary classification output
model_sigmoid_output = keras.Sequential([
    keras.layers.Dense(64, activation='relu', input_shape=(20,)),
    keras.layers.Dense(1, activation='sigmoid')  # Binary output
])

print("Sigmoid activation in output layer only")
```
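To see the vanishing-gradient problem in numbers, this sketch computes the sigmoid’s derivative, s(x)(1 - s(x)), in plain Python. Its maximum is 0.25, and it is tiny for large inputs. The last line is a deliberately rough illustration that ignores the weight matrices, which also enter the product during backpropagation.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x = 0

print(sigmoid_grad(0.0))   # 0.25
print(sigmoid_grad(10.0))  # about 4.5e-05

# Backpropagation multiplies one such factor per layer; even at the peak,
# ten sigmoid layers scale the gradient by at most 0.25 ** 10.
print(0.25 ** 10)          # about 9.5e-07
```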

Tanh is similar to sigmoid but outputs values between -1 and 1. Its output is centered at zero, which can make optimization easier in some cases. Like sigmoid, it has mostly been replaced by ReLU in hidden layers.
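The relationship between tanh and sigmoid is exact: tanh(x) = 2 * sigmoid(2x) - 1. The sketch below checks that identity numerically with Python’s math module and shows the zero-centering: tanh(0) is 0, while sigmoid(0) is 0.5.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# tanh is a shifted, rescaled sigmoid, so its outputs lie in (-1, 1) around zero
for x in [-2.0, 0.0, 1.5]:
    assert abs(math.tanh(x) - (2 * sigmoid(2 * x) - 1)) < 1e-12

print(math.tanh(0.0), sigmoid(0.0))  # 0.0 0.5
```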

Softmax is essential for multi-class classification output layers. It converts a vector of numbers into probabilities that sum to 1. Each output represents the probability of one class.

Use softmax in the output layer for multi-class problems, and pair it with categorical crossentropy loss (or sparse categorical crossentropy if your labels are integers). Avoid softmax in ordinary hidden layers.

```python
from tensorflow import keras

# Softmax for multi-class output
model_softmax_output = keras.Sequential([
    keras.layers.Dense(128, activation='relu', input_shape=(784,)),
    keras.layers.Dense(10, activation='softmax')  # 10-class output
])

print("Softmax activation for multi-class classification")
```
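Softmax exponentiates each score and divides by the total. This plain-Python sketch also subtracts the largest score first, a standard trick that avoids overflow in exp() without changing the result, since softmax gives the same output when one constant is subtracted from every input. The scores are made up.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)                           # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
print(round(sum(probs), 6))          # 1.0
```

Without the max subtraction, an input like [1000.0, 1000.0] would overflow math.exp; with it, the function returns [0.5, 0.5].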

Decision flowchart for configuration

When configuring a neural network, follow this decision process.

For the problem type: regression uses MSE or MAE loss with a linear output (no activation), or ReLU if the target can never be negative. Binary classification uses binary crossentropy loss with a sigmoid output activation. Multi-class classification uses categorical crossentropy loss (sparse categorical crossentropy for integer labels) with a softmax output activation.

For hidden layers: use ReLU activation as the default. Try Leaky ReLU if you have issues with dead neurons. Avoid sigmoid and tanh in the hidden layers of deep networks.

For optimizers: start with Adam at learning rate 0.001. It works well for most problems. Try SGD with momentum if you have time to tune and want potentially better final performance.

These defaults are a sensible starting point for most problems. The rest may need specialized configurations based on specific requirements or data characteristics.
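The decision process can be captured in a small lookup. The helper below and its names (`DEFAULTS`, `suggest_config`) are hypothetical; the string values are standard Keras identifiers that you could pass as `loss=` or `optimizer=` to compile() or as `activation=` to a Dense layer, with None meaning a linear output.

```python
# Hypothetical helper encoding the starting-point defaults described above.
DEFAULTS = {
    "regression": {"loss": "mse", "output_activation": None},  # linear output
    "binary": {"loss": "binary_crossentropy", "output_activation": "sigmoid"},
    "multiclass": {"loss": "categorical_crossentropy", "output_activation": "softmax"},
}

def suggest_config(problem_type, integer_labels=False):
    """Return starting-point settings for a problem type."""
    config = dict(DEFAULTS[problem_type])  # copy so DEFAULTS is never mutated
    if problem_type == "multiclass" and integer_labels:
        config["loss"] = "sparse_categorical_crossentropy"
    config["hidden_activation"] = "relu"
    config["optimizer"] = "adam"  # learning rate 0.001 is the Keras default
    return config

print(suggest_config("binary"))
print(suggest_config("multiclass", integer_labels=True)["loss"])  # sparse_categorical_crossentropy
```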

Testing configurations systematically

Don’t just accept defaults without testing. Try a few configurations and compare results. Train the same model with Adam and SGD. Compare MSE and MAE for regression. See if Leaky ReLU improves on ReLU.

Use validation data to compare fairly: the configuration with the best validation performance wins. Don’t overtune to the validation data, though, or you’ll overfit to your validation set; keep a separate test set for the final evaluation.

Keep configurations simple initially. Get a baseline working with standard choices. Then experiment with one change at a time so you know what actually helps.
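The one-change-at-a-time loop can be sketched without any framework. In this toy example, which is an assumption for illustration rather than a Keras workflow, a single linear unit is fit to made-up data with full-batch gradient descent, only the learning rate varies, and each run is scored on held-out validation points instead of its training loss.

```python
# Toy sketch: vary ONE setting and compare runs on validation data.
# The data, model (w * x + b), and candidate values are all assumptions.

def make_data(xs):
    return [(x, 2.0 * x + 1.0) for x in xs]

train = make_data([0.0, 0.5, 1.0, 1.5, 2.0])
val = make_data([0.25, 1.25, 1.75])

def mse(w, b, data):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train_linear(learning_rate, epochs=200):
    """Full-batch gradient descent; everything except learning_rate is fixed."""
    w, b = 0.0, 0.0
    n = len(train)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

results = {}
for lr in [0.001, 0.01, 0.1]:      # the single setting being varied
    w, b = train_linear(lr)
    results[lr] = mse(w, b, val)   # judge on validation data, not training loss

best_lr = min(results, key=results.get)
print(results)
print("best learning rate:", best_lr)
```

In a real project, train_linear would be replaced by compiling and fitting your Keras model with one setting changed, and the score would be the val_loss that fit() reports when you pass validation data.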

Neural network optimization through proper choice of optimizers, loss functions, and activation functions makes the difference between models that work and models that excel. Understanding these building blocks lets you design networks intelligently for your specific problems.

Ready to put everything together and understand neural networks from the ground up? Check out our complete guide on what a neural network is to see how all these components fit together into the architecture that powers modern deep learning.